à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½ - Making Sense Of Mixed-Up Text

Have you ever opened a document, visited a website, or even just looked at a file name, and found what looks like a secret code staring back at you? Instead of familiar letters, you might see symbols like "Ã", "ã", "¢", or a whole string such as "à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½". It can leave you wondering what went wrong with your words. You are definitely not alone: these character messes are a common digital hiccup, and there are good reasons why they show up.

Seeing text that has gone awry is truly frustrating. This kind of output is often called "garbled characters," or 亂碼 (luànmǎ) in Chinese. It feels like your computer is speaking a different language, producing a jumble of characters with no clear meaning. In our experience, a page will sometimes display things like "Ã«, Ã, Ã¬, Ã¹, Ã" right where normal letters should be. This can happen even when the page headers declare UTF-8 and the MySQL connection encoding has been set to match, which are generally the right choices. A single step elsewhere in the chain that uses a different encoding is enough to garble the output.

When data is pulled from a big spreadsheet or a database, it sometimes comes out looking all wrong. A simple character like "é" can turn into "Ã©", which is a puzzle to anyone trying to read it. Strange combinations like these, including "à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½", are common. They signal that something is off in how the computer maps stored bytes to letters and symbols. Below we look at what causes this and how to sort it out, because nobody wants their text to look like a random assortment of symbols.

Table of Contents

- What Makes Text Look Like à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½ Anyway?

- Is Your Data Speaking in Tongues? Understanding Encoding Hiccups

- How Can We Untangle These Character Puzzles?

- Tools to Help Fix à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½ and Other Jumbles

- What's the Deal with "Binary ASCII Characters" and à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½?

- How Does à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½ Affect Websites and Documents?

- Avoiding Future Character Mishaps with à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½

What Makes Text Look Like à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½ Anyway?

When your computer shows you "à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½" or other strange character groupings, it's usually a sign of an "encoding problem." Every letter, number, and symbol on your screen is stored inside your computer as a number, or more precisely as one or more bytes. A character encoding is a set of rules that tells the computer which numbers stand for which characters. If your system reads those numbers with the wrong set of rules, you get mixed-up characters. It's a bit like trying to read a coded message with the wrong key. At its core, it really is that simple.
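To make the "numbers behind the letters" idea concrete, here is a short Python sketch. Python is our choice for illustration, and nothing in the article depends on it. The hypothetical helper `describe` reports a character's Unicode code point. It also shows the bytes the character becomes under two encodings, UTF-8 and Windows-1252.

```python
def describe(ch):
    """Hypothetical helper: show a character's code point and its bytes in two encodings."""
    return {
        "code_point": ord(ch),          # the character's number in Unicode
        "utf8": ch.encode("utf-8"),     # how UTF-8 stores that number
        "cp1252": ch.encode("cp1252"),  # how Windows-1252 stores it
    }


for ch in ["A", "é", "€"]:
    print(repr(ch), describe(ch))
```

Note how "é" is a single byte in Windows-1252 but two bytes in UTF-8. A program that reads those bytes with the other set of rules cannot get the letter back.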

This usually happens when text is written using one encoding and then opened or displayed using another. We've seen it often: a page shows "Ã«, Ã, Ã¬, Ã¹, Ã" instead of the letters that were intended. The computer is doing its best to show something, but it is effectively guessing at what the numbers mean. The problem is especially common when information moves between different systems or programs. It is a fundamental mismatch between how the text was written and how it is being read.

One very typical example is a character like "é", common in many languages, turning into "Ã©". This transformation is a classic sign of UTF-8 text being interpreted as an older single-byte format, such as Latin-1 (ISO-8859-1) or its close relative Windows-1252. In UTF-8, "é" is stored as two bytes, 0xC3 and 0xA9. When the computer is told the text is Latin-1, it displays each byte as a separate Latin-1 character. 0xC3 is "Ã" and 0xA9 is "©", so you see "Ã©". It's a very common mix-up.
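Here is a minimal Python sketch of that exact mix-up and how to reverse it, assuming the garbled text is still intact. The reversal encodes the text with the wrong encoding to recover the original bytes, then decodes those bytes as UTF-8. The sample word is our own.

```python
# Reproduce the classic "é" -> "Ã©" mix-up, then undo it.
original = "café"

# The text is saved as UTF-8 ...
raw_bytes = original.encode("utf-8")  # b'caf\xc3\xa9'

# ... but read back as Latin-1, which turns every byte into its own character.
garbled = raw_bytes.decode("latin-1")
print(garbled)  # cafÃ©

# Undo it: turn the wrong characters back into the original bytes,
# then decode those bytes with the encoding they were really written in.
repaired = garbled.encode("latin-1").decode("utf-8")
print(repaired)  # café
```

The round trip only works while the garbled characters are untouched. If they have since been edited, replaced, or stripped, the original bytes are gone.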

Is Your Data Speaking in Tongues? Understanding Encoding Hiccups

It's a common scenario: you have a big spreadsheet full of important text. Everything looks fine when you enter the information. But when you export it to use somewhere else, the characters suddenly look wrong. This is an "encoding problem," and it makes your data speak in a strange, unreadable way. The original text was probably fine, but somewhere along the line the system interpreting the characters got its wires crossed.

The core of this issue comes down to character sets and how they're used. UTF-8 is a widely accepted way to handle text because it can represent every character in Unicode, which covers practically every writing system in use. Older systems or programs, however, may still be set up for more limited character sets. Examples are ASCII, with 128 characters, and the single-byte ISO-8859 family, with room for at most 256 characters each. When you mix these up, the results get messy. It's like reading a book written in one alphabet with a key made for a completely different alphabet: you just won't get the right words.
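As a quick check of which character sets can hold which text, this Python sketch tries to encode a sample string in ASCII, Latin-1, and UTF-8. The sample is our own and mixes accented Latin letters with a Cyrillic word. `encodable_in` is a hypothetical helper.

```python
def encodable_in(text, encodings):
    """Hypothetical helper: report which encodings can represent every character of `text`."""
    result = {}
    for enc in encodings:
        try:
            text.encode(enc)  # raises UnicodeEncodeError if any character has no byte form
            result[enc] = True
        except UnicodeEncodeError:
            result[enc] = False
    return result


sample = "naïve café, жук"  # accented Latin letters plus a Cyrillic word
print(encodable_in(sample, ["ascii", "latin-1", "utf-8"]))
# {'ascii': False, 'latin-1': False, 'utf-8': True}
```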

In our experience, these problems pop up in specific situations. Examples include moving data from one database to another, from a database to a spreadsheet, or even between parts of the same program. If the encoding isn't kept consistent throughout that whole process, you're likely to see unwanted symbols. It's a chain reaction: one wrong step in interpreting the characters garbles everything after it. The goal is to make sure every step agrees on the encoding.
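The sketch below simulates one such hand-off in Python. A spreadsheet-style CSV export is written as UTF-8, then read back twice: once with the matching encoding and once assuming Windows-1252. The file name and rows are made up for illustration.

```python
import csv
import os
import tempfile

# Illustrative rows with accented names (made-up sample data).
rows = [["name", "city"], ["José", "São Paulo"], ["Zoë", "Zürich"]]


def read_rows(path, encoding):
    """Read a CSV file back using the given encoding."""
    with open(path, encoding=encoding, newline="") as f:
        return list(csv.reader(f))


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "export.csv")

    # Export step: state the encoding explicitly instead of relying on a platform default.
    with open(path, "w", encoding="utf-8", newline="") as f:
        csv.writer(f).writerows(rows)

    # Import step that agrees with the export: the data survives intact.
    consistent = read_rows(path, "utf-8")
    # Import step that assumes Windows-1252: each accented letter becomes two symbols.
    mismatched = read_rows(path, "cp1252")

print(consistent[1])  # ['José', 'São Paulo']
print(mismatched[1])  # ['JosÃ©', 'SÃ£o Paulo']
```

In practice, the fix is to state the encoding explicitly at every step, export and import alike, rather than trusting each program's default.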

When à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½ Shows Up in Files

You might open a text file or a document, and there it is: a string of characters that makes no sense, perhaps even "à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½". This happens because a plain text file usually doesn't record which encoding it was saved in. A byte-order mark at the very start of the file is the main exception. It's up to the program opening the file to guess, or to be told, which set of rules to use. If it guesses wrong, or the file was saved in an encoding the program isn't expecting, you get strange symbols. It's a bit like trying to play a record on a cassette player: the format just doesn't match.
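Programs that open files have to guess somehow. One common heuristic goes like this: honour a UTF-8 byte-order mark if one is present, otherwise try strict UTF-8, and only then fall back to a legacy encoding. This Python sketch implements it with a hypothetical helper, `decode_with_fallback`. Windows-1252 as the fallback is our assumption.

```python
import codecs


def decode_with_fallback(data):
    """Hypothetical helper: guess how to decode raw file bytes.

    Returns (text, encoding_used). This is a heuristic, not a guarantee:
    a UTF-8 byte-order mark wins outright, then strict UTF-8 is tried,
    and Windows-1252 is the last resort.
    """
    if data.startswith(codecs.BOM_UTF8):
        return data.decode("utf-8-sig"), "utf-8-sig"  # "utf-8-sig" strips the BOM
    try:
        return data.decode("utf-8"), "utf-8"  # strict: raises on invalid byte sequences
    except UnicodeDecodeError:
        # A handful of byte values are undefined in Windows-1252; replace them.
        return data.decode("cp1252", errors="replace"), "cp1252"


print(decode_with_fallback("Überraschung".encode("utf-8")))
print(decode_with_fallback("Überraschung".encode("cp1252")))
```

Trying UTF-8 first matters. A legacy single-byte encoding will decode almost any byte sequence without complaint, so it can't tell you when it's wrong. By contrast, non-ASCII text in a legacy encoding rarely happens to be valid UTF-8.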

A common scenario involves files created on one operating system and then moved to another, since different systems can have different default encodings. For example, a file made on an older Windows system may use a legacy "code page" such as Windows-1252. A Linux system or a modern web server, on the other hand, typically expects UTF-8. When that file is opened on the new system, its bytes are interpreted with the wrong rules and the text gets scrambled. This is a very frequent cause of character jumbles.
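If you know, or can reasonably guess, which code page an old file uses, converting it once to UTF-8 removes the ambiguity. This Python sketch assumes Windows-1252 as the source encoding. `convert_to_utf8` and the file names are hypothetical.

```python
import os
import tempfile


def convert_to_utf8(src_path, dst_path, source_encoding="cp1252"):
    """Hypothetical helper: re-save a legacy code-page file as UTF-8.

    `source_encoding` is an assumption you must supply; the file itself
    does not say which code page it was written in.
    """
    with open(src_path, "r", encoding=source_encoding, newline="") as src:
        text = src.read()
    with open(dst_path, "w", encoding="utf-8", newline="") as dst:  # newline="" keeps line endings
        dst.write(text)


with tempfile.TemporaryDirectory() as tmp:
    legacy = os.path.join(tmp, "notes-cp1252.txt")
    modern = os.path.join(tmp, "notes-utf8.txt")
    with open(legacy, "wb") as f:
        f.write("Crème brûlée, 5 €".encode("cp1252"))  # simulate an old Windows file
    convert_to_utf8(legacy, modern)
    with open(modern, "rb") as f:
        converted_bytes = f.read()

print(converted_bytes.decode("utf-8"))  # Crème brûlée, 5 €
```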

Even on the same system, if a text editor saves a file in a less common encoding, other programs may struggle to read it correctly. This is particularly true for documents that contain special symbols or characters from languages other than English. When "à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½" or similar sequences appear, the program displaying the text is almost certainly using the wrong key to unlock the characters in the file. It's a simple miscommunication between the file and the reader.

How Can We Untangle These Character Puzzles?

When faced with text like "à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½", making it readable again can feel tricky. The good news is that there are usually clear paths to follow. In our experience, the problem scenarios are fairly typical, and each has a known fix. The job is to identify where the breakdown in communication happened, then apply the right fix.

One of the first things to consider is the source of the text. Did it come from a website, a database, a document, or an email? Knowing the origin gives you clues about which encoding was used initially. Text from an older system may use a legacy encoding, while text from a modern web application is very likely UTF-8. This initial detective work helps narrow down the possibilities.

Next, look at the settings of the program or system that is *displaying* the text. Is your web browser detecting the character encoding automatically, or defaulting to something specific? Is your text editor opening every file as if it were in one particular encoding? Sometimes simply changing the display encoding setting makes garbled text readable instantly. It's a quick fix that has saved us a lot of headaches.
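Flipping the encoding setting in a viewer amounts to decoding the same bytes under different candidate encodings and seeing which result reads correctly. Menu names differ between programs. This Python sketch does the same thing for a made-up German phrase, and the candidate list is our assumption.

```python
raw = "Grüße aus Köln".encode("utf-8")  # the bytes as they arrived

# Show the same bytes under several candidate encodings, like switching
# a viewer's encoding setting until the text looks right.
candidates = ["cp1252", "latin-1", "utf-8"]
previews = {}
for enc in candidates:
    previews[enc] = raw.decode(enc, errors="replace")
    print(f"{enc:>8}: {previews[enc]!r}")
```

Only the UTF-8 reading gives "Grüße aus Köln". The other two show the telltale "Ã" pairs. Under Latin-1 there is also an invisible control character where "ß" should be.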

Tools to Help Fix à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½ and Other Jumbles

Luckily, you don't have to solve these encoding puzzles all by yourself. There are handy tools made specifically to untangle garbled text, including strings like "à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½". One notable example is a Python library called 'ftfy', short for "fixes text for you." It is good at making sense of text that seems broken. It can also work on whole files, not just individual strings, which is a very practical feature.

ftfy provides functions such as `fix_text` and `fix_file`, which detect and correct common encoding mistakes automatically. It's a bit like a smart assistant that looks at the jumbled characters and works out what they were supposed to be, based on common patterns of corruption. That can save a lot of time and frustration when you're dealing with a lot of messy data.
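Here is a minimal sketch of calling ftfy's `fix_text` from Python; install it with `pip install ftfy`. So that the example still runs without ftfy installed, it falls back to `naive_fix`. This is a hypothetical and much simpler stand-in that undoes exactly one layer of UTF-8-read-as-Windows-1252 damage. It is not how ftfy works internally, and `repair` is likewise our own wrapper name.

```python
try:
    from ftfy import fix_text  # third-party library: pip install ftfy
except ImportError:
    fix_text = None


def naive_fix(text):
    """Simplified stand-in, NOT ftfy's algorithm: undo one layer of
    UTF-8-read-as-Windows-1252 damage, or return the text unchanged."""
    try:
        return text.encode("cp1252").decode("utf-8")
    except (UnicodeEncodeError, UnicodeDecodeError):
        return text  # not reversible this way, so leave it alone


def repair(text):
    """Use ftfy when available, otherwise the naive fallback."""
    return fix_text(text) if fix_text is not None else naive_fix(text)


print(repair("cafÃ©"))  # café
print(repair("already fine"))  # already fine
```

ftfy uses heuristics to decide whether a change actually improves the text, which the naive version does not. On rare inputs that happen to decode cleanly, `naive_fix` could "repair" text that was already correct.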

Beyond programming libraries, there are online resources too. Some websites offer "online garbled code recovery" services: you paste in your mixed-up text, and they try to convert it back to something readable. There are also reference materials such as "Unicode Chinese garbled code quick check tables." These let you look up specific strange character combinations and see what they probably represent, which helps when you're diagnosing the problem yourself. They work like maps for confusing characters.
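A quick check table like that can also be generated rather than looked up. This Python sketch shows how a handful of accented letters look after being written as UTF-8 and read as Windows-1252. The table is keyed by the garbled form so you can look it up. The helper `mojibake_table` and the character list are our own.

```python
def mojibake_table(chars, written_as="utf-8", read_as="cp1252"):
    """Hypothetical helper: map each garbled form back to the character it came from."""
    table = {}
    for ch in chars:
        garbled = ch.encode(written_as).decode(read_as, errors="replace")
        table[garbled] = ch
    return table


table = mojibake_table("éèêàçñöü")
for garbled, intended in table.items():
    print(f"{garbled!r:10} -> {intended}")
```

One entry is worth noting: "à" turns into "Ã" followed by a non-breaking space. That is why garbled text sometimes shows a lone "Ã" that seems to be followed by an ordinary space.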

Why à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½ Needs a Helping Hand

When characters like "à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½" appear, it's more than a minor annoyance: it means the information itself is not getting through. That has real consequences when the text is important, like product descriptions on a website or critical data in a report. If the text is unreadable, the message is lost, which can lead to misunderstandings or incorrect actions. It really does need to be sorted out for things to work as they should.

Imagine a customer trying to read about a product online, but the description is full of "Ã", "ã", "¢", "â‚" and other strange symbols. They won't be able to understand what they're looking at, and they'll likely just leave. That means lost opportunities and a poor experience for anyone trying to use your content. So fixing these character problems is about more than tidiness. It's about making sure your information is clear and accessible to everyone who needs to see it.

Tools that help with "à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½" and similar issues are valuable because they automate a process that would otherwise be tedious and error-prone. Fixing every instance of garbled text in a large file by hand would take an enormous amount of time and effort. These tools act like a safety net, catching and correcting character mistakes before they cause bigger problems. They make the digital world a little more predictable and a lot less frustrating.

What's the Deal with "Binary ASCII Characters" and à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½?

When people talk about a "binary ASCII characters table" or "special characters codes," they're referring to the most basic way computers store text. At heart, all data on a computer is a series of zeros and ones: binary code. ASCII (American Standard Code for Information Interchange) is one of the oldest and most fundamental systems for assigning a unique number to each letter, digit, and common symbol. It defines 128 codes, numbered 0 to 127. For example, the letter 'A' is the number 65, which is 1000001 in binary. This foundational system is everywhere in some form. UTF-8, for instance, encodes those first 128 characters exactly as ASCII does.
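Here is a small Python sketch of the ASCII side of this. The hypothetical helper `ascii_code` returns a character's ASCII number and its 7-bit binary form. It returns `None` for characters ASCII doesn't cover.

```python
def ascii_code(ch):
    """Hypothetical helper: return (decimal, 7-bit binary) for an ASCII character, else None."""
    if not ch.isascii():
        return None  # e.g. "é" is code point 233, outside ASCII's 0 to 127
    n = ord(ch)
    return n, format(n, "07b")


for ch in ["A", "a", "é"]:
    print(repr(ch), ascii_code(ch))
```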

The problem arises when systems interpret these numbers using the wrong character map. ASCII only covers the values 0 to 127. A UTF-8 character like "é" is stored as two bytes that are both above 127, so a strictly ASCII reader would reject them or replace them with placeholder symbols. What usually happens instead is that the bytes are read with a single-byte encoding such as Latin-1 or Windows-1252, which gives each byte a character of its own. That is what produces strange symbols, including patterns like "à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½". The computer is trying to make sense of the data using the only rules it knows, and the result is a mess.

Characters outside the basic English alphabet need more than one byte in UTF-8. Most accented Latin letters and all Cyrillic letters take two bytes, symbols like "€" take three, and most emoji take four. Sometimes an older or misconfigured system reads these multi-byte characters as if each byte were a character of its own. It then breaks them apart and displays each byte as a separate, often unreadable symbol. So what you see as "à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½" is the raw bytes of the original characters, each shown as a distinct, wrong character from a different encoding scheme. It's a direct result of a mismatch in how the binary data is read and turned into something visible.
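To see the byte-splitting directly, this Python sketch encodes a few characters as UTF-8. It then decodes the bytes as Windows-1252, our assumed "wrong" encoding, which maps each byte to a character of its own.

```python
# "\u0440" is the Cyrillic letter "р" (not Latin "p"); "\U0001F600" is the 😀 emoji.
samples = ["A", "é", "\u0440", "€", "\U0001F600"]

results = {}
for ch in samples:
    encoded = ch.encode("utf-8")
    # A single-byte decoder turns each byte into a separate character.
    misread = encoded.decode("cp1252", errors="replace")
    results[ch] = (len(encoded), misread)
    print(f"{ch!r}: {len(encoded)} byte(s) {encoded.hex(' ')} -> {misread!r}")
```

The Cyrillic letter "р" comes out as "Ñ€", the same pair that appears in this article's title. That suggests the title began as Cyrillic text stored in UTF-8. The other pairs in the title start with "à" where this process would produce "Ð", so they appear to have been damaged further along the way. We can't tell exactly how from the garbled text alone.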

How Does à ºà ¸à ¼ Ñ€à µ à ²à ¾à ½ Affect Websites and Documents?

- 18 If%C3%A5%C3%BFa Sotwe

- Bob Saget First Wife

- Pilipinay

- Nita Bhaduri Howard Ross

- Is Lena Miculek Still Married

Office Desks FURDINI CT 3534A, | Komnit Express

7,927 Bell Pepper Drop Images, Stock Photos & Vectors | Shutterstock

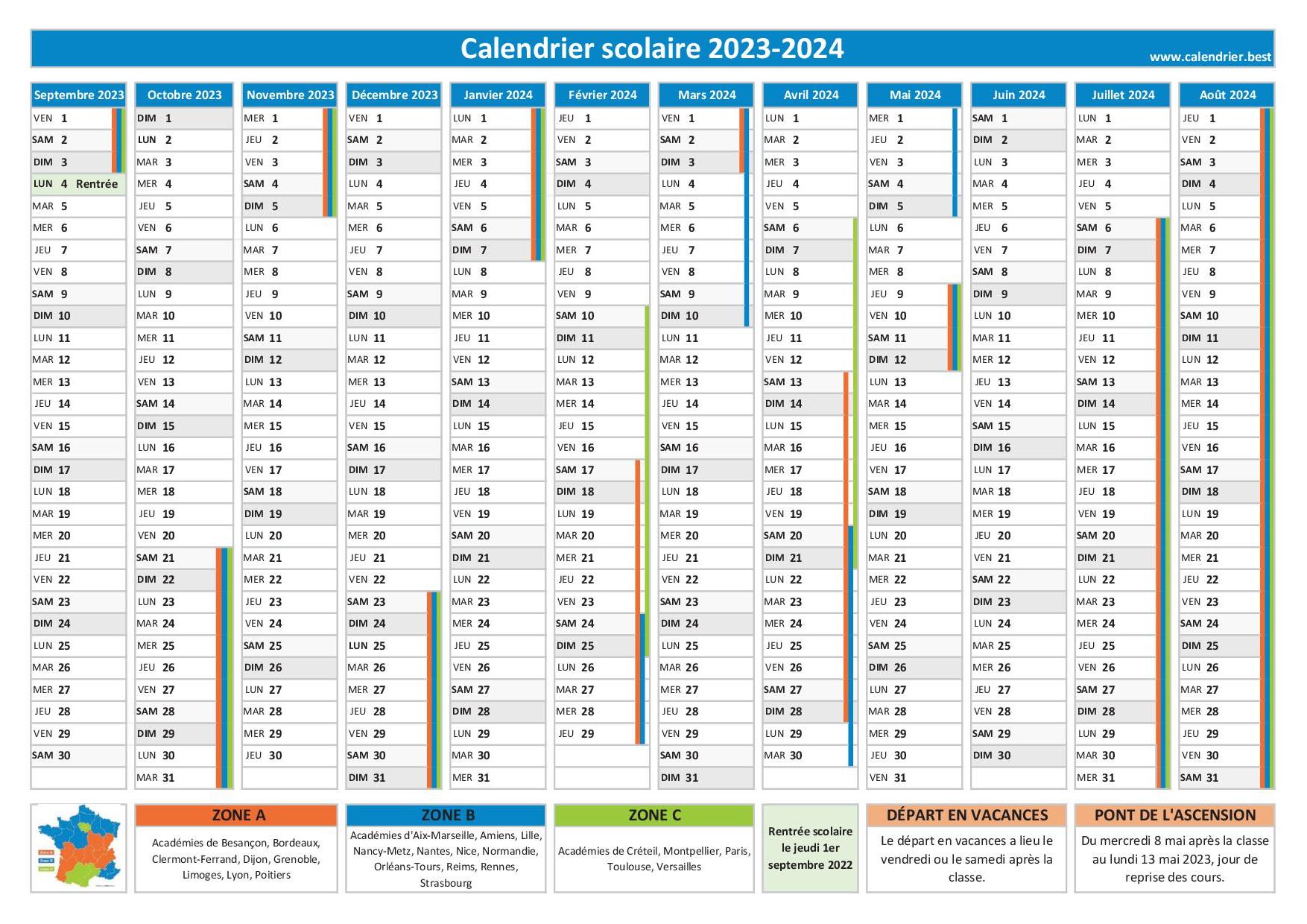

Calendrier Scolaire 2023 2024 Csp - Image to u